The trouble with mindfulness

Don’t get me wrong: mindful practice and self-reflective practice should both be encouraged. Being aware of the factors that affect our thinking, and actively deploying de-biasing strategies to mitigate them, can result in better decisions.

The trouble with mindfulness comes when it’s prescribed as the sole solution to a cognitive error. You know the M&M whose take-home message is:

We all need to be mindful of (insert cognitive bias here) when (insert situation here). Try not to let it happen again.

But is being mindful the only way to prevent cognitive error?

When it comes to patient safety, people usually point to the airline industry as the example of a high-reliability industry, but I’m going for something more relatable: the car industry. It has managed to massively lower the incidence of car accidents, large and small, over the last few decades, despite a massive increase in the number of cars on the road and despite, it’s pretty safe to say, less mindful drivers. The industry gave up on trying to make the average driver infallible. Instead, it accepted that people would continue to make the same cognitive errors they have always made, and focused on making the car, the roads and the legislation mitigate those errors. Blind-spot warning, autonomous braking and parking, automatic lane assist, drowsy driver alerts, reversing cameras and proximity sensors, navigation aids. Your current car will have dozens of such features that your med school car didn’t.

You haven’t become a more mindful driver; your car and the roads have made you a safer driver.

So, just as error can be an individual or a systems issue, the prevention of error can focus on the individual or on the system.

But which approach is more effective?

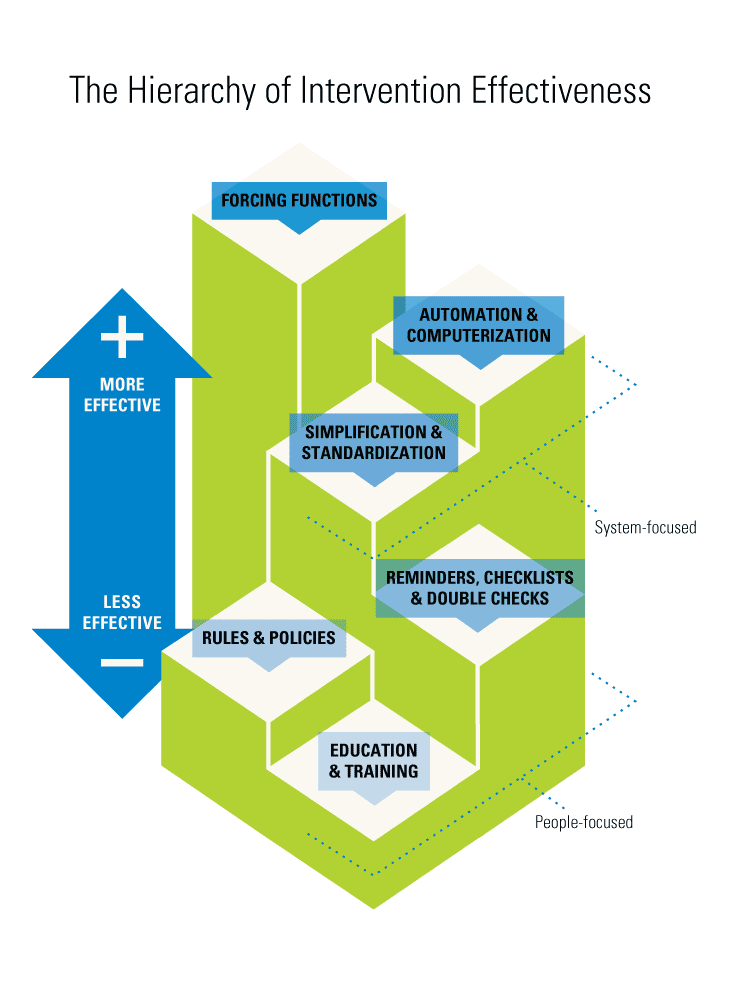

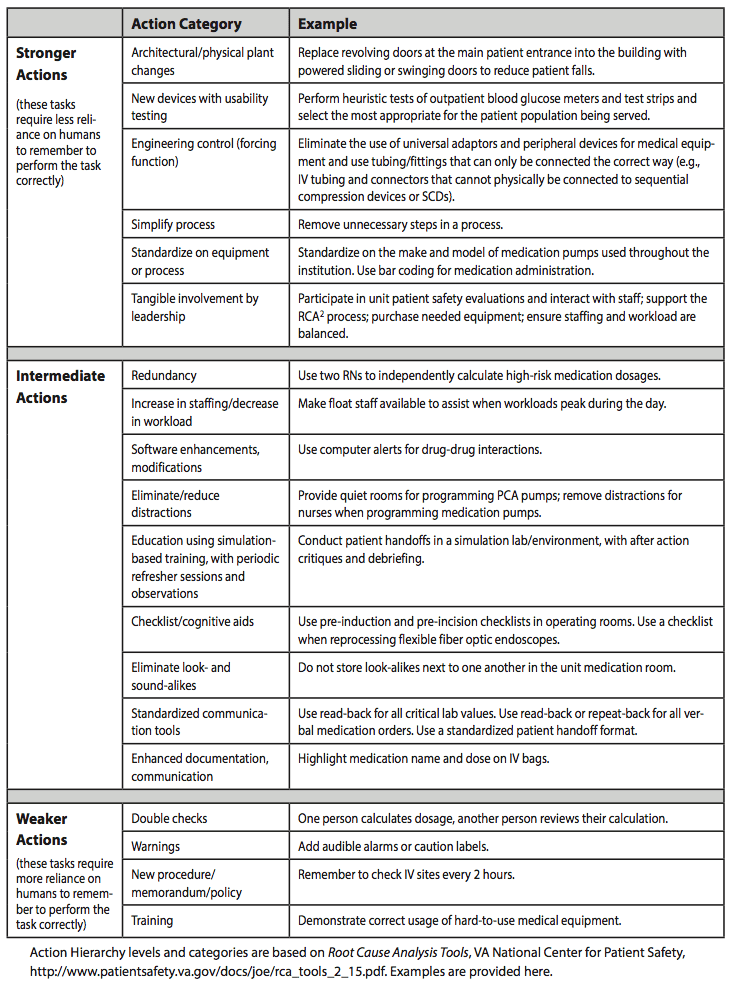

The US Department of Veterans Affairs National Center for Patient Safety developed the Action Hierarchy (also known as the Hierarchy of Intervention Effectiveness). It was modelled on the National Institute for Occupational Safety and Health’s Hierarchy of Controls, which has been used for decades in many other industries to improve safety. The first diagram is a stylish simplification, the second a more detailed explanation.

All of this is Human Factors and Ergonomics (HFE): the study and modification of the interface between humans and their environment to help people do the right thing. Crew Resource Management is just one part of HFE.

Using this, you can see why many of our traditional attempts at improvement have been ineffective, and why things you probably regarded as merely ‘clever’ were actually both clever and effective (e.g. Broselow card & carts instead of APLS formulas in a crisis, plaster slabs instead of full casts, getting rid of potassium ampoules).

Even cognitive debiasing strategies, which most of us deploy manually, multiple times and with multiple people (when we remember), can be made more effective by considering the hierarchy and automating them into the normal workflow. For example:

- In the days of multiple bed movements during an ER stay, ambulance notes and GP referrals could be anywhere, or even lost. Imagine a process in which both are scanned into the SMR at triage, with a link appearing in the EMR right where you write your notes to alert you to their existence. It is like a blind-spot warning for missed communication.

- The EMR automatically sending you a copy of the inpatient discharge summary and outpatient letter for every patient you saw or admitted. This would help you broaden your differential diagnosis list and give you automated feedback on your diagnostic accuracy.

- The EMR not accepting a symptom as a diagnosis, instead recording ‘vomiting of unknown origin, diagnosis yet to be determined’ and then forcing you to list a differential diagnosis in order to complete your notes.

SMILE squared

Emergency physician FACEM, Melbourne Australia